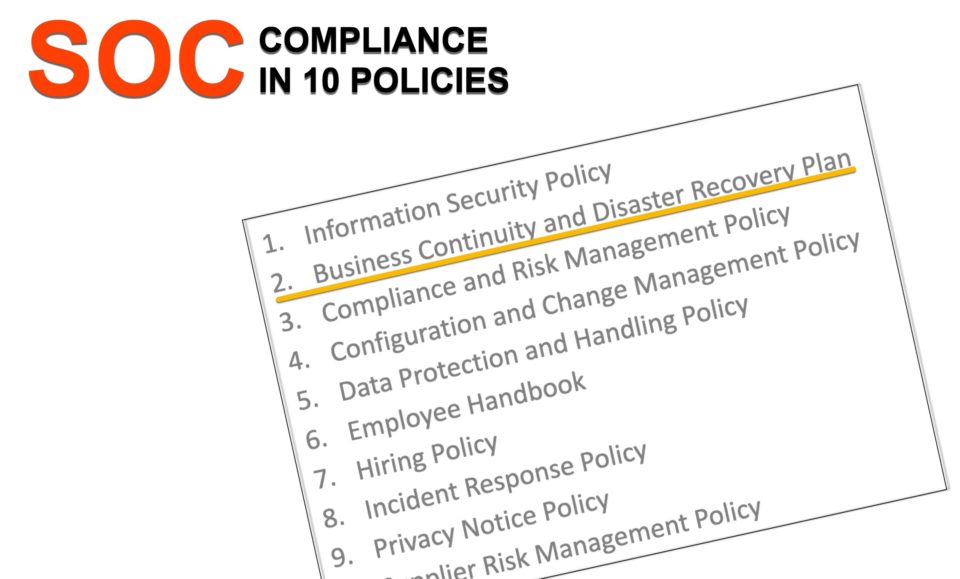

This post is the second installment in our series on achieving SOC-2, where we peel back the covers on what it took for us to get compliant. We’re walking through the 10 policies our company adopted for SOC-2 and dedicating a blog post to what each one entailed.

The first installment of the series focused on our information security policy. This post covers the business continuity and disaster recovery plan.

SOC-2 requires that you’ve thought through how to protect your business from any kind of disaster — everything from natural disasters to cyber events to the loss of a critical employee — and that you’re periodically testing that capability.

The good news for us was that our product is a disaster recovery solution for AWS. We manage disaster recovery for our own business continuity plan with that same best-in-class solution, which is built to protect the full environment at the platform layer. Disaster recovery is what our business is about.

That said, any DR plan has to account for two categories of disaster scenarios:

- The failure of infrastructure, and the ability to recover your service on alternate infrastructure. This alternate infrastructure usually needs to be far away from the primary, so that a large-scale event (like a natural disaster) can’t take out both the primary and the recovery infrastructure.

- A cyber compromise of your production environment, such as a ransomware attack or a disgruntled employee doing something unfathomable. To account for these disasters, it’s critical to keep immutable backups that an attacker can’t also destroy.

We account for the first category by running Arpio as a “multi-region-active” workload across 3 AWS regions, with about 2,500 miles of separation between them. Because we’re spread across regions (not just availability zones), we’re resilient to the failure of regional services, like the 7-hour outage in the us-east-1 region the other day.

We account for the second category by moving data backups out of our production AWS account. The best practice is to store your backups in a second AWS account that you don’t grant any access to. A bad actor who compromises production then can’t also compromise this environment.
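The post doesn’t say exactly how the backups in that second account are made immutable. One common AWS mechanism is S3 Object Lock, so here is a minimal sketch assuming that approach. It builds the `ObjectLockConfiguration` payload that S3’s `PutObjectLockConfiguration` API (boto3’s `put_object_lock_configuration`) accepts. The helper name, the 30-day retention, and the bucket name in the comment are illustrative assumptions, not a description of our actual setup.

```python
def object_lock_configuration(retention_days: int, mode: str = "COMPLIANCE") -> dict:
    """Hypothetical helper: build a default-retention rule for an S3 backup bucket.

    COMPLIANCE mode: no user, including the account's root user, can overwrite
    or delete a protected object version until its retention period expires.
    GOVERNANCE mode: users with special permissions can bypass the lock.
    """
    if mode not in ("COMPLIANCE", "GOVERNANCE"):
        raise ValueError("mode must be 'COMPLIANCE' or 'GOVERNANCE'")
    if retention_days < 1:
        raise ValueError("retention_days must be a positive integer")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {"DefaultRetention": {"Mode": mode, "Days": retention_days}},
    }


config = object_lock_configuration(30)
print(config)

# Applying it (requires boto3 and credentials for the *backup* account; the
# bucket must be versioned and have Object Lock enabled):
# import boto3
# boto3.client("s3").put_object_lock_configuration(
#     Bucket="example-backup-bucket",  # hypothetical bucket name
#     ObjectLockConfiguration=config,
# )
```

In this sketch, Object Lock complements the separate-account design rather than replacing it: the account boundary keeps a production attacker away from the bucket, and compliance-mode retention protects each backup version from deletion even by principals inside the backup account.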

The next hurdle was DR testing, but we were in pretty good shape there as well. We’ve run quarterly disaster recovery drills from day one, and they have never been ‘tabletop.’ We bring up the entire system, validate that it’s all working, and then tear it down. The drills are fast and efficient thanks to the extensive automation in our solution.
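A drill like this boils down to three phases: bring up, validate, tear down. Here is a minimal Python outline of that loop, with hypothetical callables standing in for the real recovery automation (the names `run_dr_drill`, `bring_up`, `checks`, and `tear_down` are illustrative, not part of any product API). The one design choice worth copying is that tear-down runs in a `finally` block, so a failed drill never leaves a duplicate environment running.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class DrillResult:
    passed: bool
    failed_checks: List[str] = field(default_factory=list)
    duration_seconds: float = 0.0


def run_dr_drill(
    bring_up: Callable[[], None],
    checks: Dict[str, Callable[[], bool]],
    tear_down: Callable[[], None],
) -> DrillResult:
    """Recover the environment, run every validation check, then always tear down.

    If bring_up itself raises, tear_down still runs and the exception propagates.
    A check that raises is recorded as a failure instead of aborting the drill.
    """
    start = time.monotonic()
    failed: List[str] = []
    try:
        bring_up()
        for name, check in checks.items():
            try:
                ok = check()
            except Exception:
                ok = False
            if not ok:
                failed.append(name)
    finally:
        tear_down()
    return DrillResult(
        passed=not failed,
        failed_checks=failed,
        duration_seconds=time.monotonic() - start,
    )


if __name__ == "__main__":
    result = run_dr_drill(
        bring_up=lambda: print("recovering environment (stub)"),
        checks={"api_responds": lambda: True, "db_restored": lambda: True},
        tear_down=lambda: print("tearing down recovered environment (stub)"),
    )
    print(result.passed, result.failed_checks)
```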

On the people side, we already had good redundancy thanks to our process for cross-training colleagues. But we needed to formalize how we, as a group, would communicate with each other in the event of a disaster. Our team usually communicates in Slack, but as the Facebook outage on October 4, 2021 showed, an outage can sometimes take down your communication tools as well. That lesson was fresh as we were planning here.

We went old school to solve this. We created a formal contact list including real-life phone numbers (yes! phone numbers!) to ensure connectivity to every person on the team in the event of a disaster. We then posted and shared that contact list and communicated where, when, and how to access it.

The documented policy itself came primarily from Laika. We tweaked 20% of it; the rest was standard boilerplate that didn’t require much work.